Train your SLM with the distil-cli Claude Skill

You need a model that converts natural language to SQL. Your data is sensitive, so you can't send queries to external APIs and the model has to run locally. You grab an off-the-shelf small model, try it out, and... it's just not good enough. The SQL is malformed, the joins are wrong, the column names are hallucinated.

You could fine-tune something yourself, but now you're deep in PyTorch tutorials and wondering what "CUDA error: device-side assert triggered" means. And even if you get a tutorial working, you need thousands of labeled examples, which means weeks of data wrangling and labeling before you can even start training.

What if you could just describe what you need to Claude, hand it a few examples, and go make dinner? When you come back, there's a model on your laptop that does exactly what you asked for.

In this post, we will do exactly that and train a Text2SQL model in a single conversation. We'll start with a bunch of unstructured data, use the Distil Labs Claude skill to handle the heavy lifting, and end up with a working model running locally. You can follow along with this walkthrough: all the artifacts we used are in the example repo.

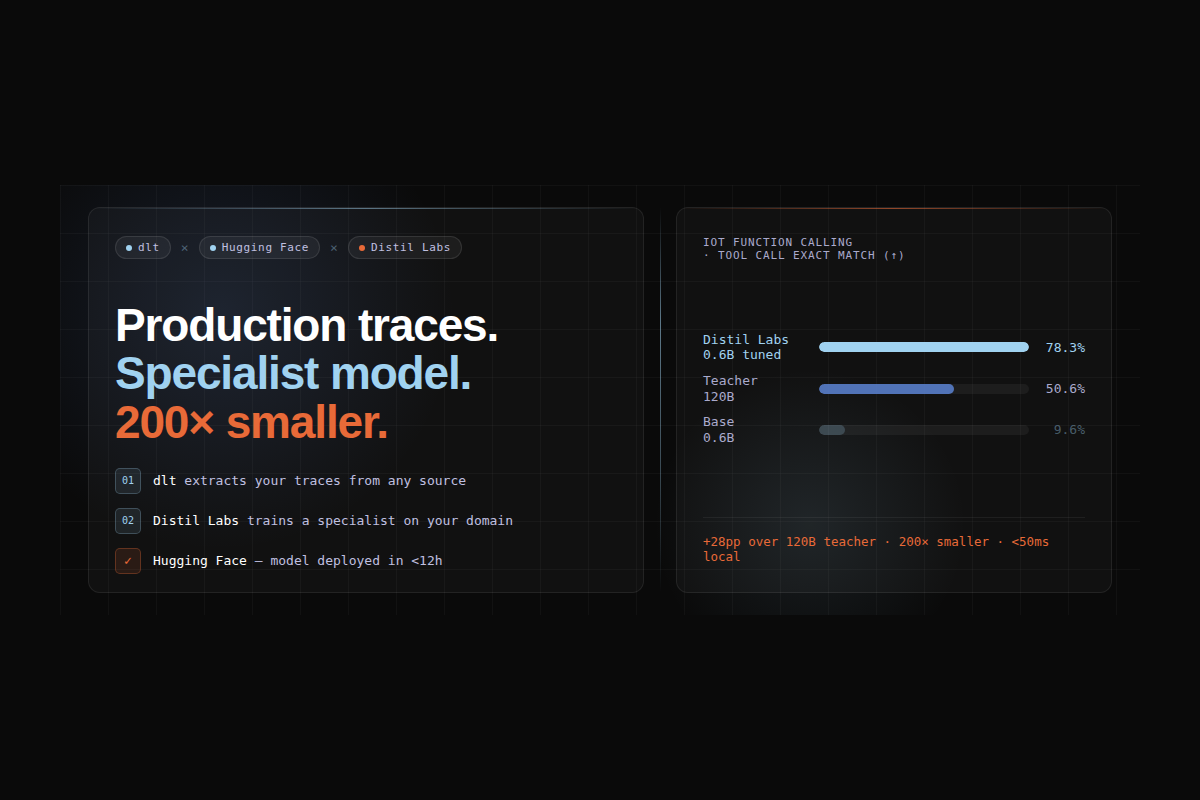

What is Distil Labs?

Distil Labs is a platform for training task-specific small language models. We use knowledge distillation to compress what large models know into tiny, specialized models. The result: models 50-400x smaller than current state-of-the-art LLMs that maintain comparable accuracy and run entirely on your machine. Check out our docs if you want to dive deeper.

Getting Started

Distil Labs gives you two ways to train models: the distil CLI for full control, or a Claude skill for a conversational workflow. The CLI is scriptable and automatable. The Claude skill lets you describe what you need and handles the rest, guiding you through task selection, data preparation, and execution.

We'll use the Claude skill for this tutorial. Here's the setup:

Install the Distil CLI

curl -fsSL https://cli-assets.distillabs.ai/install.sh | sh

distil login # or distil signup if you're new

Install the Claude Skill

Follow the instructions from distil-cli-skill. For Claude Code:

/plugin marketplace add https://github.com/distil-labs/distil-cli-skill

/plugin install distil-cli@distil-cli-skill

With that done, let's train a model.

Training a Text2SQL Model

To get started, I asked Claude to explain the distil-cli training process:

> Hey Claude, we will be training an SLM to translate text2sql

> using distil-cli-skill. Can you first briefly explain the workflow to me?

⏺ Distil CLI Workflow for Training SLMs

Here's the core workflow for training a text-to-SQL model:

1. Create a Model

distil model create my-text2sql-model

2. Select Task Type

For text-to-SQL, you'd probably use Question Answering

3. Prepare Data Files

| File                 | Purpose                              |
|----------------------|--------------------------------------|
| job_description.json | Task objectives and configuration    |
| config.yaml          | Task type and model settings         |
| train.csv            | 20+ labeled (question, SQL) pairs    |
| test.csv             | Held-out evaluation set              |

4. Upload Data

distil model upload-data <model-id> --data ./my-data-folder

5. Teacher Evaluation (validation step)

distil model run-teacher-evaluation <model-id>

6. Train the Model

distil model run-training <model-id>

7. Download & Deploy

distil model download <model-id>

Selecting the Right Task Type

Distil Labs supports several task types: Classification, Question Answering, Tool Calling, and RAG. The first order of business is picking the right task for our problem:

> I am looking to train a model that can take in the SQL schema

> together with my question as input and return an SQL query as output.

⏺ Based on the Distil CLI documentation, Question Answering is the

right task type for text-to-SQL. It's designed for text transformations

where you take text input and produce text output - exactly what you need.

You can think of Question Answering as a generic text-in/text-out transformation task (we have a different task for training RAG models). The model learns to generate the right answer given an input.

Preparing the Data

To start with, I had about 100 conversations with other LLMs where I'd asked questions and gotten SQL queries back. It's not a large curated dataset, just the kind of traces you accumulate when working with databases (you can see them in the example repo). I asked Claude to start with those and help me create the input files Distil Labs expects:

> ok perfect, lets use the data I have in conversations directory

> to create the job description, test and train datasets.

> Split the conversations into test and train 50/50

⏺ Your data is in conversation format with 100 conversations

examples. I need to split the data and convert it to Distil's

question/answer format. Let me create the required files:

⏺ Write(data/job_description.json)

⏺ Write(data/config.yaml)

⏺ Bash(python3 << 'EOF' import json...)

⎿ Conversion complete!

⏺ All files are ready in the data/ directory:

| File                 | Description                          |
|----------------------|--------------------------------------|
| job_description.json | Task description for text-to-SQL     |
| config.yaml          | Config with Qwen3-0.6B student model |
| train.jsonl          | 50 training examples                 |
| test.jsonl           | 50 test examples                     |

> Hey I want to use deepseek 3.1 as the teacher model

⏺ Update(data/config.yaml)

⎿ Updated teacher_model_name to deepseek-v3.1

That's all the prep work. Claude handled the file format conversion; I just pointed it at my raw data. You can see the generated files in the example repo.
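For readers curious what that conversion roughly involves, here is a minimal sketch, not the script Claude actually generated. It assumes each conversation is a list of `{"role", "content"}` turns and that the target records use `question` and `answer` keys; both the input layout and the helper names are hypothetical, so check the generated files in the example repo for the real format.

```python
import json
import random

def to_qa_record(conversation):
    """Turn one conversation into a question/answer pair."""
    # Assumption: the first user turn carries schema + question,
    # and the first assistant turn carries the SQL answer.
    question = next(t["content"] for t in conversation if t["role"] == "user")
    answer = next(t["content"] for t in conversation if t["role"] == "assistant")
    return {"question": question.strip(), "answer": answer.strip()}

def split_half(records, seed=0):
    """Shuffle deterministically, then split 50/50 into train and test."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r) for r in records) + "\n"

# Toy stand-in for the 100 conversations (hypothetical content).
conversations = [
    [
        {"role": "user", "content": f"Schema: CREATE TABLE t{i} (id INTEGER); Question: count rows"},
        {"role": "assistant", "content": f"SELECT COUNT(*) FROM t{i};"},
    ]
    for i in range(100)
]

records = [to_qa_record(c) for c in conversations]
train, test = split_half(records)
train_jsonl = to_jsonl(train)
test_jsonl = to_jsonl(test)
print(len(train), len(test))
```

The fixed seed keeps the split reproducible, so the test set stays held out if you rerun the conversion.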

Running Teacher Evaluation

Before spending hours on training, we validate that a large model can actually solve this task:

> ok lets create a model and then kick off teacher evaluation

⏺ Bash(distil model create text2sql-demo-deepseek)

⎿ ID: bf961e16-8b92-4ca9-9187-a1f53eb7620f

⏺ Bash(distil model upload-data bf961e16... --data data/)

⎿ Upload successful.

⏺ Bash(distil model run-teacher-evaluation bf961e16...)

⎿ Kicked off teacher evaluation

After about 2 minutes:

⏺ Teacher evaluation completed successfully!

| Metric               | Score  |
|----------------------|--------|
| Rouge                | 89.36% |
| Meteor               | 88.31% |
| Binary (Exact Match) | 52.00% |
| LLM-as-a-Judge       | 80.00% |

Interpretation:

- Rouge/Meteor (high): Teacher generates SQL queries with very similar structure to reference answers

- Binary (52%): About half match exactly - expected since SQL can be written multiple valid ways

- LLM-as-a-Judge (80%): When judged semantically, 80% of teacher outputs are correct

Claude helped me interpret the results: an 80% LLM-as-a-Judge score means most of the teacher's outputs are semantically correct, which is a green light to proceed.
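The gap between the exact-match and semantic scores is easier to see with a concrete pair of queries. The sketch below is an illustration only: it does not reproduce how Distil Labs computes its metrics, and it swaps the LLM judge for a simpler stand-in (running both queries against a toy SQLite table and comparing the rows). The table and both queries are made up for the example.

```python
import sqlite3

def normalize(sql):
    """Lowercase, collapse whitespace, and drop a trailing semicolon."""
    return " ".join(sql.lower().split()).rstrip(";").strip()

def exact_match(predicted, reference):
    """Lenient string comparison between two queries."""
    return normalize(predicted) == normalize(reference)

def same_result(conn, predicted, reference):
    """Run both queries and compare their rows, ignoring row order."""
    rows_a = sorted(conn.execute(predicted).fetchall())
    rows_b = sorted(conn.execute(reference).fetchall())
    return rows_a == rows_b

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (name TEXT, department TEXT, salary REAL);
    INSERT INTO employees VALUES
        ('Ana', 'Engineering', 90000),
        ('Ben', 'Engineering', 85000),
        ('Cy',  'Sales',       70000);
""")

reference = "SELECT department, COUNT(*) FROM employees GROUP BY department;"
predicted = "SELECT e.department, COUNT(e.name) AS n FROM employees AS e GROUP BY e.department"

print(exact_match(predicted, reference))        # the strings differ
print(same_result(conn, predicted, reference))  # the rows agree
```

Aliases, column naming, and `COUNT(*)` versus `COUNT(column)` all break a string match without changing the answer on this data, which is why a low Binary score alongside a high judge score is not alarming.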

Training the Model

> ok lets kick off the training now

⏺ Bash(distil model run-training bf961e16...)

⎿ Kicked off SLM training ID 5a91464d-22e7-4aed-8e10-dc48608bca43

⏺ Bash(distil model training bf961e16...)

⎿ Training ID: 5a91464d-22e7-4aed-8e10-dc48608bca43

Status: ◐ Distilling

Training takes a few hours. Behind the scenes, the teacher model generates synthetic training data from our examples, validates it for quality, and the student model learns from it. Time to make dinner 🍕.

Training Results

When training finishes, we can inspect the results:

> OK the model is trained, lets check the results

⏺ Bash(distil model show bf961e16...)

⎿ ID: bf961e16-8b92-4ca9-9187-a1f53eb7620f

Name: text2sql-demo-deepseek

Evaluation Results

| Metric         | Score  |
|----------------|--------|
| Rouge          | 88.46% |
| Meteor         | 88.47% |
| Binary         | 40.00% |
| LLM-as-a-Judge | 74.00% |

That's 74% LLM-as-a-Judge on a 0.6B model, very close to the teacher's 80%. Time to download and use it.

Downloading the Model

We can download the model directly to our working directory to embed it into our workflow:

> lets download the model locally and unpack what we get

⏺ Bash(distil model download bf961e16...)

⎿ Downloaded model to bf961e16...-model.tar

⏺ Here's what you get when downloading a trained model:

downloaded-model/

├── model.gguf (2.2 GB) - Quantized model for Ollama

├── Modelfile (1.6 KB) - Ollama configuration

├── model_client.py (2.6 KB) - Python client to invoke the model

├── README.md (2.4 KB) - Deployment instructions

├── model/ # Full precision model (HuggingFace format)

└── model-adapter/ # LoRA adapter only (35 MB)

Once the model is here, we have four deployment options: Ollama, llama.cpp, or transformers serve for quick local use, or vLLM for production.
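As a rough idea of what talking to a locally served model looks like, here is a sketch that targets Ollama's HTTP API on its default port. It is not the bundled model_client.py, which remains the supported route and may format prompts differently. The model name `text2sql` is an assumption: use whatever name you pass to `ollama create`.

```python
import json
import urllib.request

# Ollama's default local endpoint for single-shot generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(model, prompt):
    """Build a non-streaming generation request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(model, prompt, timeout=60):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]

# Building the request does not touch the network, so it is safe to inspect.
request = build_request("text2sql", "Schema: ... Question: ...")
print(request.full_url)
```

Calling `generate("text2sql", prompt)` only works once the model has been registered with Ollama and the server is running.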

Building an App

For the finale, I asked Claude to build something useful:

> write a small app that will let us query a csv table with natural language.

> Deploy the model using ollama and use the model client file to query it

⏺ Bash(ollama create text2sql -f Modelfile)

⎿ gathering model components ✓

⏺ Update(model_client.py)

⎿ Added 1 line, removed 1 line

⏺ Write(app.py)

⎿ Wrote 173 lines to app.py

Claude wrote an app.py that loads CSV files into SQLite and uses our model to convert questions to SQL. To test the final application, we used example data containing two simple tables:

⏺ Bash(python app.py --csv employees.csv \

--question "What is the average salary per department?" --show-sql)

⎿ Generated SQL: SELECT department, AVG(salary) FROM employees GROUP BY department;

department AVG(salary)

Engineering 87500.000000

Marketing 58333.333333

Sales 68333.333333

It works. Natural language in, correct SQL out, results back.
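The core loop of such an app is small. The sketch below is not the app.py Claude wrote (that file is in the example repo); it is a minimal illustration of the same idea: load a CSV into an in-memory SQLite table, hand the schema and question to the model, and execute the SQL that comes back. The `generate_sql` function is a hypothetical stand-in that returns the query shown above instead of calling the model, and the CSV rows are toy data.

```python
import csv
import io
import sqlite3

def coerce(value):
    """Store numeric-looking CSV cells as numbers so aggregates like AVG behave."""
    try:
        return float(value)
    except ValueError:
        return value

def load_csv(conn, table, csv_text):
    """Create `table` from CSV text and return a schema string for the prompt."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    columns = ", ".join(f'"{name}"' for name in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(
        f'INSERT INTO "{table}" VALUES ({placeholders})',
        [[coerce(cell) for cell in row] for row in data],
    )
    return f"CREATE TABLE {table} ({', '.join(header)});"

def generate_sql(schema, question):
    """Hypothetical stand-in for the model call; returns a fixed query."""
    return "SELECT department, AVG(salary) FROM employees GROUP BY department;"

# Toy data, not the example repo's employees.csv.
EMPLOYEES_CSV = """name,department,salary
Ana,Engineering,90000
Ben,Engineering,85000
Cy,Sales,70000
"""

conn = sqlite3.connect(":memory:")
schema = load_csv(conn, "employees", EMPLOYEES_CSV)
sql = generate_sql(schema, "What is the average salary per department?")
rows = conn.execute(sql).fetchall()
for department, avg_salary in rows:
    print(department, avg_salary)
```

In a real app, `generate_sql` would send the schema and question to the local model, and it is worth restricting execution to read-only statements before running generated SQL.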

The Results

What We Built

A task-specific Text2SQL model running entirely on a laptop. No GPU required. No API calls. The model is 0.6B parameters and about 2GB on disk.

The full code, training data, and resulting app are available at github.com/distil-labs/distil-example-text2sql-with-claude.

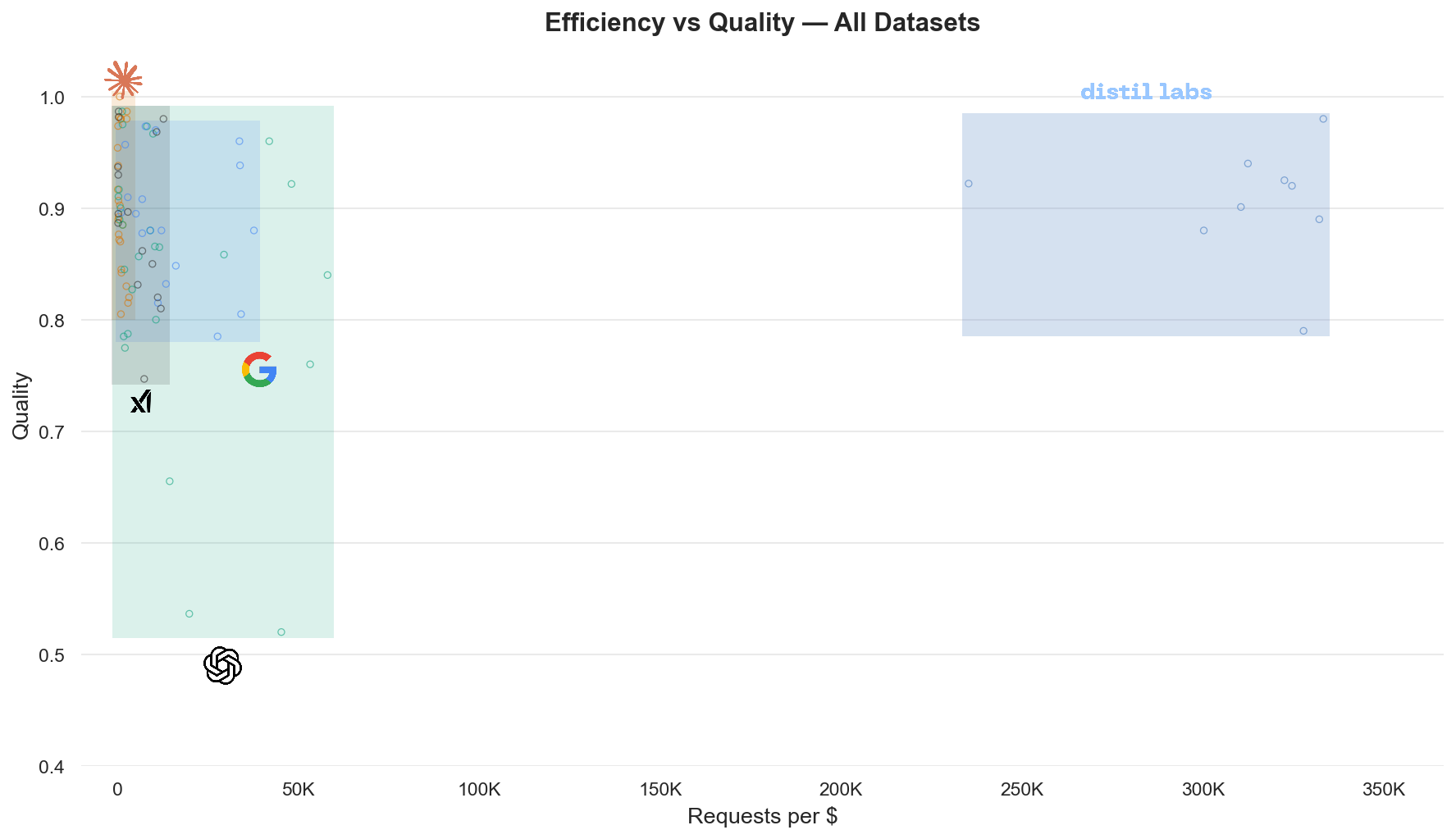

The Numbers

We evaluated the base model, the teacher model (DeepSeek-V3.1), and our fine-tuned model on a held-out test set.

The fine-tuned model comes close to teacher performance (74% vs. 80% on LLM-as-a-Judge) while being a fraction of the size and running locally.

Before and After

Here's what the base Qwen3 0.6B model produces vs our fine-tuned version:

Question: Which artists have total album sales over 1 million?

-- Base model: Complete hallucination, ignores the question entirely

SELECT artists.name FROM artists

WHERE artists.genre IS NULL OR artists.country IS NULL;

-- Fine-tuned model: Correct JOIN, GROUP BY, and HAVING

SELECT a.name FROM artists a

JOIN albums a_album ON a.id = a_album.artist_id

GROUP BY a.id, a.name HAVING SUM(a_album.sales) > 1000000;

Question: How many applicants applied for each position?

-- Base model: Misunderstands the question, counts positions instead of applicants

SELECT COUNT(DISTINCT position) AS num_applicants FROM applicants;

-- Fine-tuned model: Correct GROUP BY aggregation

SELECT position, COUNT(*) AS applicant_count FROM applicants GROUP BY position;

The pattern with the base model: missing GROUP BY clauses, WHERE instead of HAVING, wrong aggregations. It knows SQL syntax but can't reliably translate intent into correct queries. After fine-tuning, the model handles these cases correctly.
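To see how the two answers to the applicants question diverge in practice, you can run both queries against a toy table. This is a sketch with made-up rows, and the two-column `applicants` schema is an assumption, since the post does not show the real one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE applicants (name TEXT, position TEXT);
    INSERT INTO applicants VALUES
        ('Ana', 'Engineer'), ('Ben', 'Engineer'), ('Cy', 'Engineer'),
        ('Dee', 'Analyst'), ('Eli', 'Analyst');
""")

# Queries copied from the before/after comparison.
base_sql = "SELECT COUNT(DISTINCT position) AS num_applicants FROM applicants;"
tuned_sql = "SELECT position, COUNT(*) AS applicant_count FROM applicants GROUP BY position;"

base_rows = conn.execute(base_sql).fetchall()
tuned_rows = sorted(conn.execute(tuned_sql).fetchall())

print(base_rows)   # a single number: how many distinct positions exist
print(tuned_rows)  # one row per position with its applicant count
```

Both queries are valid SQL and run without error, which is what makes this class of mistake easy to miss: only the fine-tuned query answers the question that was asked.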

Get Started

# Install the CLI

curl -fsSL https://cli-assets.distillabs.ai/install.sh | sh

distil signup

# Add the Claude skill

# Then just ask Claude to help you train a model

Resources

We built a custom AI model by having a conversation. No infrastructure. No ML expertise. Just Claude, a few examples, and an evening. And honestly? It was fun.
