TL;DR

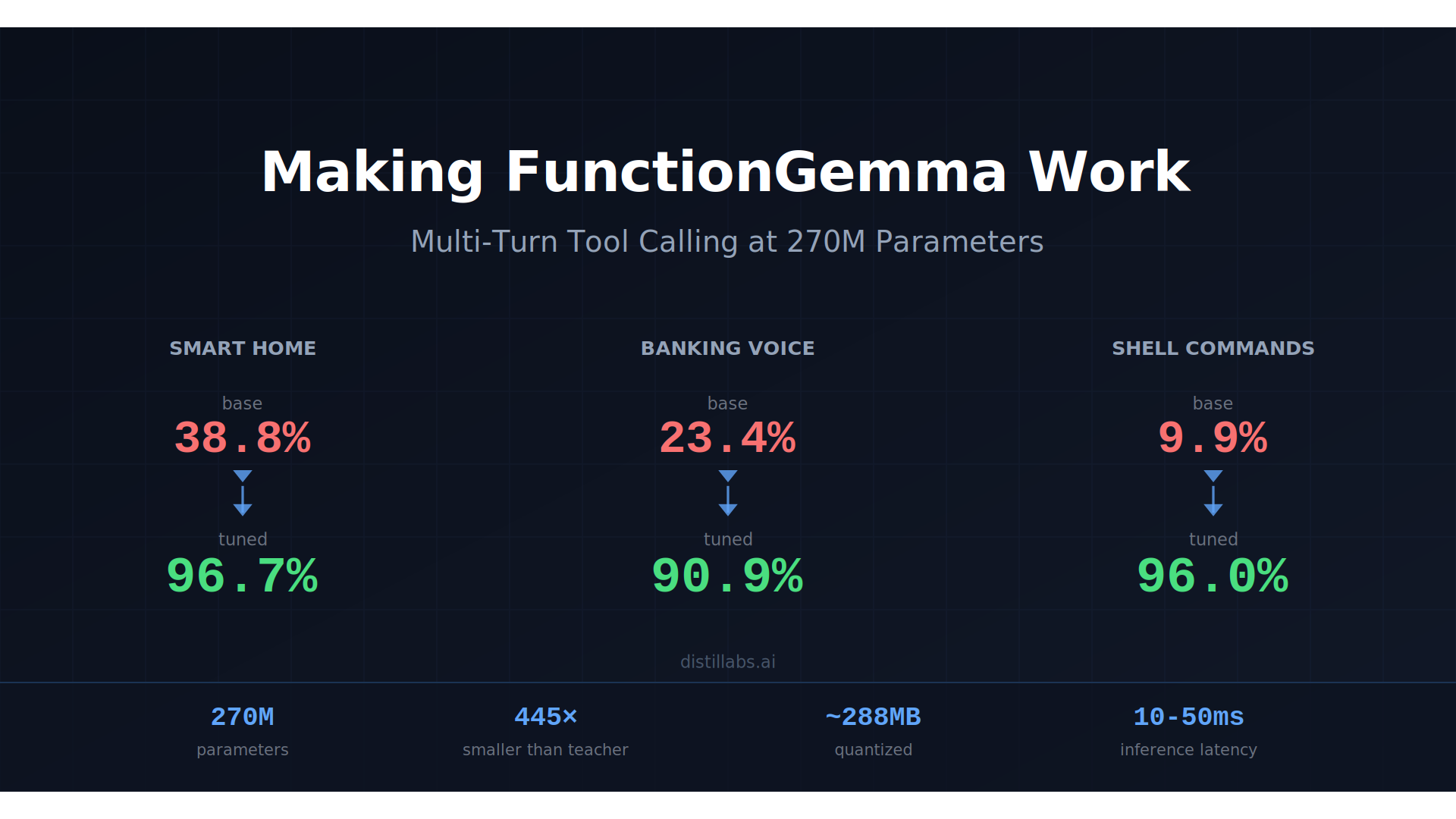

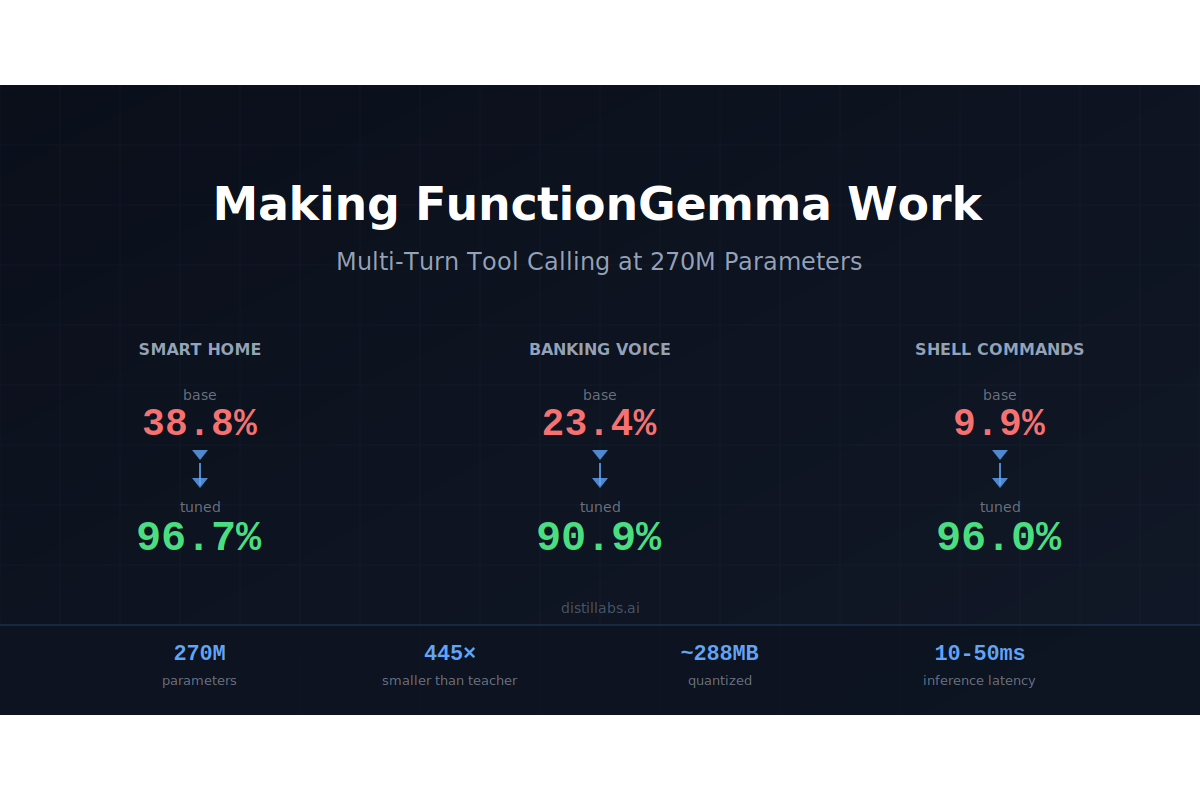

- FunctionGemma is not trained out of the box for multi-turn tool calling, scoring 10-39% tool call equivalence across three benchmarks. Google says so themselves: the model is "intended to be fine-tuned for your specific function-calling task, including multi-turn use cases."

- After fine-tuning with Distil Labs, it hits 90-97%, matching or exceeding a 120B teacher model while staying at 270M parameters (~288MB quantized).

- Three tasks, same story: smart home control (38.8% → 96.7%), banking voice assistant (23.4% → 90.9%), and shell command execution (9.9% → 96.0%).

- If you have a multi-turn function calling task, start training your own model with a prompt and a few examples.

FunctionGemma: Small, Specialized, and Incomplete

Google's FunctionGemma is one of the most exciting small models released in 2025. At 270M parameters, it's purpose-built for function calling and small enough to run on a phone CPU at 125 tokens per second with a ~288MB footprint. That combination of size and specialization makes it uniquely interesting for embedded systems, mobile apps, voice assistants, and anywhere else where you need structured tool calling but can't afford to ship a multi-billion parameter model.

There is a caveat, though. Right on the model card, Google notes:

FunctionGemma is intended to be fine-tuned for your specific function-calling task, including multi-turn use cases.

Our benchmarks confirm this. Across three different multi-turn tool calling tasks, base FunctionGemma scores between 9.9% and 38.8% on tool call equivalence, making it unusable for any real application that involves back-and-forth dialogue. The good news is that FunctionGemma is an excellent starting point. Using the Distil Labs platform, we fine-tuned it on three multi-turn tasks and consistently pushed accuracy above 90%, matching or exceeding a 120B teacher model. This post walks through the results and what it took to get there.

The Multi-Turn Problem

While most small models can handle single-turn function calling reasonably well (user says something, model outputs a structured tool call), multi-turn function calling is a fundamentally harder problem. The model needs to track conversation history, carry forward previous function calls, handle intent changes mid-conversation, and produce valid structured output at every single turn.

The difficulty compounds multiplicatively: if a model gets 80% of individual tool calls correct, its probability of getting every call right across a 5-turn conversation drops to roughly 33% (0.8^5), and even at 95% single-turn accuracy, you only reach 77% over five turns. Every percentage point matters enormously once you start chaining turns together, which is why base FunctionGemma's scores make it not suitable:

At these levels, the model fails more often than it succeeds on even a single turn, and multi-turn conversations are effectively impossible. The failure modes are consistent across tasks: generating free text instead of structured JSON, hallucinating function names not in the catalog, losing slot values across turns, and producing malformed output that breaks downstream parsing.

How to Address It

We used the Distil Labs platform to fine-tune FunctionGemma on three multi-turn tool calling tasks. The pipeline follows the same approach we use across all our models: define the task with a prompt and examples, generate synthetic training data using a large teacher model, validate and filter that data, then fine-tune the student.

Setup

For the smart home and voice assistant tasks, we used existing datasets that we had previously built for those domains. For the shell command task, we generated 5,000 synthetic training examples from seed data using the full Distil Labs pipeline. In all three cases, FunctionGemma was trained independently from scratch on the dataset.

The results confirm that the key ingredient is high-quality task-specific data. The same curated dataset that produces a strong Qwen3-0.6B model also produces a strong FunctionGemma model, without any model-specific adjustments.

Evaluation Metrics

We report two metrics across all tasks:

- Tool Call Equivalence: Does the predicted tool call exactly match the reference? This is the primary metric. Predicted and reference outputs are converted to Python

dictobjects and compared using equality. It's binary: 0 (no match) or 1 (exact match). - ROUGE: Surface-level text similarity between predicted and reference outputs. Useful as a secondary signal but less informative for structured outputs than exact match.

Results: From Unusable to Production-Ready

Smart Home Control

Dataset: distil-labs/distil-smart-home | Model: distil-labs/distil-home-assistant-functiongemma

Banking Voice Assistant

Dataset: distil-labs/distil-voice-assistant-banking

Shell Command Execution (Gorilla)

Dataset: distil-labs/distil-SHELLper

Discussion

Across all three tasks, the pattern is consistent:

- Base FunctionGemma is unusable for multi-turn tool calling (10-39% accuracy).

- Fine-tuned FunctionGemma matches or exceeds the 120B teacher on two out of three tasks, and comes within 6 points on the third.

- The model is 445× smaller than the teacher (270M vs 120B parameters), enabling deployment in constrained environments like in-browser inference or on a single CPU core, with latency in the 10-50ms range.

The voice assistant task is the only case where the tuned model doesn't fully close the gap with the teacher (90.86% vs 96.95%). This task involves 14 distinct banking functions with complex slot types and ASR transcription artifacts in the input, making it the most demanding of the three. Even so, 90.86% represents a massive improvement over the 23.35% baseline and is a viable starting point for production use with an orchestrator handling edge cases.

Task Breakdown

Task 1: Smart Home Control

What it does: Converts natural language commands ("turn off the kitchen lights," "set the thermostat to 72") into structured function calls for a smart home system. Handles multi-turn conversations where users adjust commands, ask about device states, or issue sequences of actions.

Function catalog: Device control operations including light management, thermostat adjustment, lock control, and scene activation.

Why it's hard: Users issue commands in varied and informal language, often referencing previous turns ("turn that one off too"). The model needs to resolve ambiguous references and maintain context about which devices have been discussed.

Result: The tuned model hits 96.71% tool call equivalence, exceeding the 120B teacher (92.11%). This is the strongest result across all three tasks, likely because the function catalog is relatively constrained and the input language patterns are consistent.

Models: Safetensors + GGUF | GGUF only

Task 2: Banking Voice Assistant

What it does: Routes customer requests to the correct banking function (check balance, transfer money, cancel card, report fraud, etc.) while extracting required parameters like account types, amounts, and card numbers. Sits behind an ASR module in a full voice pipeline.

Function catalog: 14 banking operations including check_balance, transfer_money, pay_bill, cancel_card, replace_card, report_fraud, and more.

Why it's hard: This is the most demanding task for several reasons. The function catalog is large (14 operations with varied slot types), the training data includes ASR transcription artifacts (filler words, homophones, word splits) that add noise, and users frequently change intents mid-conversation ("Actually, what's my balance?" after starting a card cancellation).

Result: The tuned model reaches 90.86% tool call equivalence, a massive jump from 23.35% but still 6 points below the teacher's 96.95%. The remaining gap likely comes from the combination of ASR noise and the larger function catalog. For production use, the orchestrator's slot elicitation loop catches most remaining errors by asking clarifying questions.

Training data: distil-labs/distil-voice-assistant-banking

Task 3: Shell Command Execution (Gorilla)

What it does: Converts natural language requests into bash commands via structured tool calls. Based on the Berkeley Function Calling Leaderboard Gorilla file system task, simplified to one tool call per turn.

Function catalog: Standard file system operations (ls, cd, mkdir, rm, cp, mv, find, grep, cat, etc.) mapped from the Gorilla function definitions.

Why it's hard: This task covers diverse command patterns and requires the model to correctly map informal language to specific command parameters. The base model's 9.9% score confirms this is a genuinely difficult task for a 270M model without training.

Result: The tuned model achieves 96.04% tool call equivalence, essentially matching the 120B teacher (97.03%). This is the most dramatic improvement of the three: from 9.9% to 96.0%, nearly a 10× jump in accuracy.

Training data & demo: distil-labs/distil-SHELLper

Train Your Own

The workflow we used for FunctionGemma is generic across multi-turn tool calling tasks. If you have a set of functions you want a small model to call reliably, here's how to get started:

1. Define your tool schema

Specify the functions and their argument schemas. Be specific about argument types, required fields, and allowed values. See the smart home training data or voice assistant training data for the format.

2. Create seed examples

Write 20-100 example conversations covering your functions, including multi-turn slot filling, intent changes, and error recovery. If your model sits behind ASR, include transcription artifacts in the training data.

3. Train with Distil Labs

curl -fsSL <https://cli-assets.distillabs.ai/install.sh> | shdistil login

distil model create my-function-calling-model

distil model upload-data <model-id> --data ./data

distil model run-training <model-id>

distil model download <model-id>You can also use the Distil CLI Claude Code skill to train models directly from Claude Code or Claude.ai.

4. Deploy

The trained model works with Ollama, vLLM, llama.cpp, or any inference runtime that supports GGUF or Safetensors. For the quantized GGUF variant, you get the full model in under 300MB.

If you have a task in mind, start with a short description and a handful of examples; we'll take it from there. Visit distillabs.ai to sign up and start training, or join our Slack community to ask questions.

Datasets, training data, and trained models are linked throughout the post. For more on how the Distil Labs platform works, see our platform introduction and benchmarking post.