We benchmarked 12 small language models across 8 tasks to find the best base model for fine-tuning

TL;DR

Fine-tuned SLMs can outperform much larger models: fine-tuned Qwen3-4B matches or exceeds GPT-OSS-120B (a 30× larger teacher) on 7 of 8 benchmarks, with the remaining one within 3 percentage points. On the SQuAD 2.0 dataset, the fine-tuned student surpasses the teacher by 19 points. This means you can get frontier-level accuracy at a fraction of the cost, running entirely on your own hardware.

Best fine-tuned performance: Qwen3 models consistently deliver the strongest results after fine-tuning, with the 4B version performing best overall. If your goal is maximum accuracy on a specific task, Qwen3-4B is your starting point.

Most tunable (🐟-ble) (biggest gains from fine-tuning): Smaller models improve dramatically more than larger ones. If you're constrained to very small models (1B-3B), don't worry - they benefit most from fine-tuning and can close much of the performance gap to their larger counterparts.

Introduction

If you're building AI applications that need to run on-device, on-premises, or at the edge, you've probably asked yourself: which small language model should I fine-tune? The SLM landscape is crowded (Qwen, Llama, Gemma, Granite, SmolLM) and each family offers multiple size variants. Picking the wrong base model can mean weeks of wasted compute or a model that never quite hits production quality.

We ran a systematic benchmark to answer this question with data. Using the distil labs platform, we fine-tuned 12 models across 8 diverse tasks (classification, information extraction, open-book QA, and closed-book QA), then compared their performance against each other and against the teacher LLM used to generate synthetic training data.

This post answers four practical questions:

- Which model produces the best results after fine-tuning?

- Which model is the most tunable? (i.e., gains the most from fine-tuning)

- Which model has the best base performance? (before any fine-tuning)

- Can our best student actually match the teacher?

Methodology

We evaluated the following models:

- Qwen3 family: Qwen3-8B, Qwen3-4B-Instruct-2507, Qwen3-1.7B, Qwen3-0.6B. Note that we turned off thinking for this family to ensure an even playing field.

- Llama family: Llama-3.1-8B-Instruct, Llama-3.2-3B-Instruct, Llama-3.2-1B-Instruct

- SmolLM2 family: SmolLM2-1.7B-Instruct, SmolLM2-135M-Instruct

- Gemma family: gemma-3-1b-it, gemma-3-270m-it

- Granite: granite-3.3-8b-instruct

For each model, we measured:

- Base score: Few-shot performance with prompting alone

- Finetuned score: Performance after training on synthetic data generated by our teacher (GPT-OSS 120B)

Our 8 benchmarks span classification (TREC, Banking77, Ecommerce, Mental Health), document understanding (docs), and question answering (HotpotQA, Roman Empire QA, SQuAD 2.0). For details on the evaluation setup, see our platform benchmarking post.

Results aggregation: To create fair measurement, we ranked models on each benchmark individually, then computed average ranks across all tasks and plotted the 95% confidence interval as error bars. Lower average rank = better overall performance.

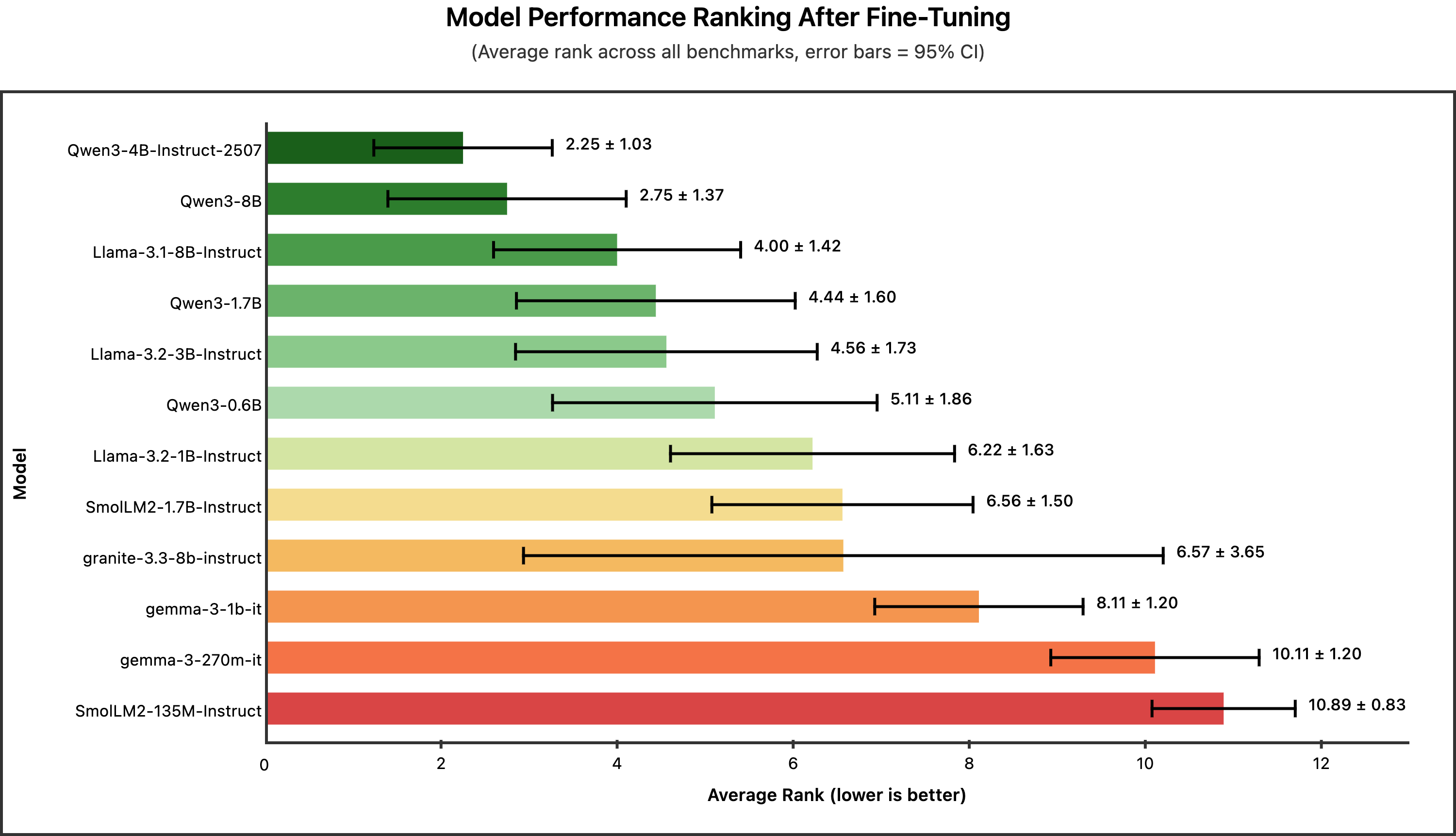

Question 1: Which Model Produces the Best Results After Fine-Tuning?

Winner: Qwen3-4B-Instruct-2507 (average rank: 2.25)

The Qwen3 family dominates the top spots, with Qwen3-4B-Instruct-2507 taking first place. Notably, this 4B model outranks the larger Qwen3-8B, suggesting that for distillation tasks, the more recent version of Qwen3 (July 25 update) yields the best result than the previous 8B SLM.

Key takeaway: If you want the best possible fine-tuned model and have GPU memory for ~4B parameters, Qwen3-4B-Instruct-2507 is your top choice.

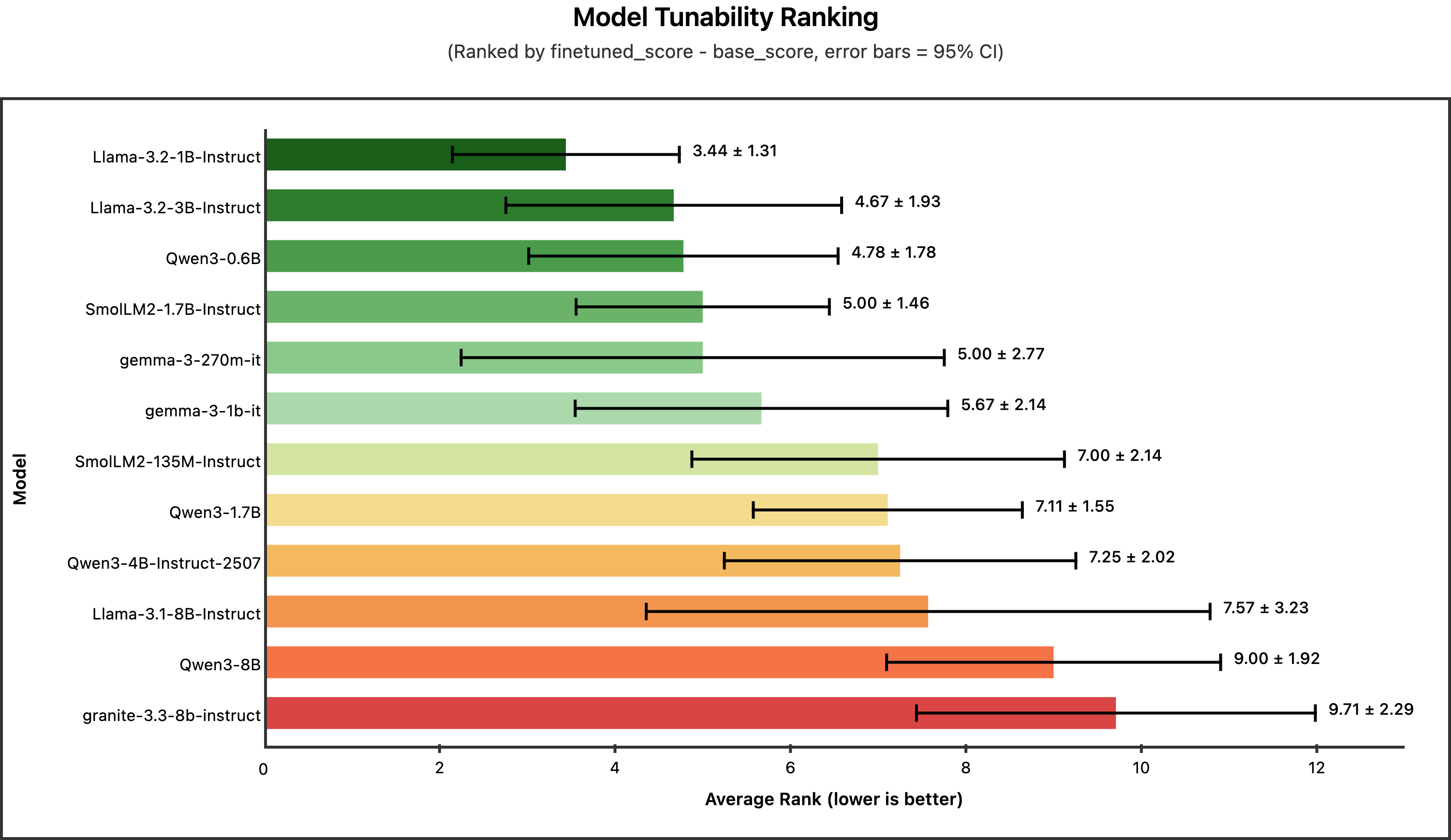

Question 2: Which Model is the Most Tunable?

Winner: Llama-3.2-1B-Instruct (average rank: 3.44)

Here we measure tunability—the improvement from base to fine-tuned performance (finetuned_score - base_score). A highly tunable model starts weaker but gains dramatically from fine-tuning.

Interestingly, the tunability ranking inverts the size hierarchy. Smaller models like Llama-3.2-1B and Qwen3-0.6B show the largest gains from fine-tuning. The largest models (Qwen3-8B, granite-3.3-8b) rank near the bottom for tunability - not because they're worse, but because they start strong and have less room to improve.

Key takeaway: If you're constrained to very small models (<2B parameters), don't despair. These models benefit most from fine-tuning and can close much of the gap to larger models.

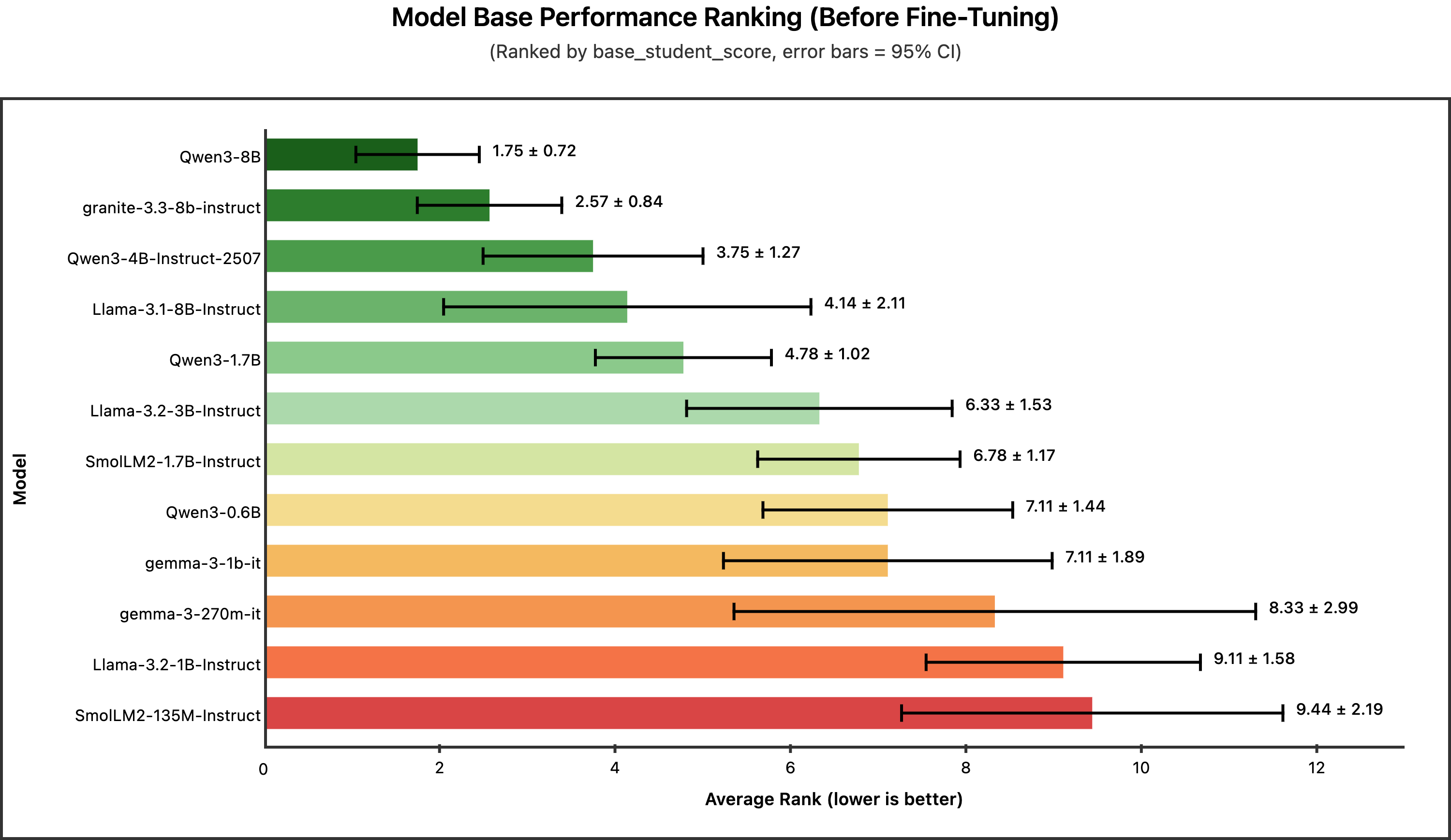

Question 3: Which Model Has the Best Base Performance?

Winner: Qwen3-8B (average rank: 1.75)

Before any fine-tuning, which model performs best out of the box?

As expected, base performance correlates with model size. The 8B models claim the top spots, with Qwen3-8B showing remarkably consistent performance across all benchmarks (lowest standard deviation).

Key takeaway: If you need strong zero-shot/few-shot performance without fine-tuning, larger models are still your best bet. But remember - this advantage shrinks after fine-tuning.

Question 4: Can Our Best Student Match the Teacher?

Yes. Qwen3-4B-Instruct-2507 matches or exceeds the teacher on 7 out of 8 benchmarks.

.png)

The fine-tuned 4B student surpasses the 120B+ teacher on 6 benchmarks, ties on 1 (HotpotQA), and falls slightly short on 1 (Banking77, within margin of error). The most dramatic improvement is on SQuAD 2.0 closed-book QA, where the student exceeds the teacher by 19 percentage points—a testament to how fine-tuning can embed domain knowledge more effectively than prompting.

Key takeaway: A 4B parameter model, properly fine-tuned, can match or exceed a model 30× its size. This translates to ~30× lower inference costs and the ability to run entirely on-premises.

Practical Recommendations

Based on our benchmarks, here's how to choose your base model:

Next Steps

This benchmark is just a starting point, and we are actively working to make these results even more robust:

- Evaluate more models: The SLM landscape evolves rapidly. We plan to add newer releases like Qwen3.5, Phi-4, and Mistral variants as they become available.

- Run more iterations: Currently, our results are averaged over a limited number of runs. We're expanding to more iterations per benchmark to tighten confidence intervals and ensure the rankings are statistically reliable.

- Expand benchmark coverage: We want to include additional task types like summarization, code generation, and multi-turn dialogue to give a fuller picture of model capabilities.

We'll update this post as new data comes in. If there's a specific model or task you'd like us to benchmark, let us know!

Training Details

Each model was fine-tuned on synthetic data generated using our distillation pipeline (see Small Expert Agents from 10 Examples for details on the data generation process). For each benchmark, we generated 10,000 training examples using the teacher model (GPTOss-120B).

Fine-tuning was performed using the default distil labs configuration: 4 epochs with learning rate of 5e-5, linear learning rate scheduler and LoRA with rank 64.

All models were trained with identical hyperparameters. Evaluation was performed on held-out test sets that were never seen during training or synthetic data generation.

Conclusion

Not all small models are created equal but the differences narrow dramatically after fine-tuning. Our benchmarks show that Qwen3-4B-Instruct-2507 delivers the best overall fine-tuned performance, matching a 120B+ teacher while being deployable on a single consumer GPU. For extremely constrained environments, smaller models like Llama-3.2-1B show exceptional tunability and can close much of the gap.

The bottom line: fine-tuning matters more than base model choice. A well-tuned 1B model can outperform a prompted 8B model. If you have a task in mind, start with a short description and a handful of examples - we'll take it from there.

The bottom line: fine-tuning matters more than base model choice. A well-tuned 1B model can outperform a prompted 8B model.

The distil labs platform helps you get the best performance from small models. Start with a task description and a few examples. Our platform handles the rest - data generation, training, and evaluation to deliver a production-ready model.

Want to try this yourself? Sign up for distil labs and train your first expert agent in under 24 hours.

If you have questions about the methodology, want to discuss specific results, or have suggestions for models or tasks we should test next, reach out at contact@distillabs.com

.png)

.svg)